Say Goodbye to Extra CodeBuild Projects: AWS CodePipeline’s New Commands Action Explained

Until now, if you wanted to run AWS CLI commands, third-party CLI commands, or simply invoke an API, you had to create a CodeBuild project, configure the project with the appropriate commands, and add a CodeBuild action to your pipeline to run the project.

Users had to deal with two components, CodePipeline and CodeBuild, and of course learn the internals of both in order to use them.

This blog explains CodePipeline’s Commands action, a new CodePipeline feature that lets you run shell commands as part of your pipeline execution. With the Commands action, you can run the AWS CLI, third-party tools, or any other shell commands.

At the end, we will also discuss whether we really no longer need CodeBuild, and in which situations CodeBuild is still needed because the Commands action would not suffice.

Motivation

- AWS released a new update for CodePipeline on Oct 4, 2024.

- AWS CodePipeline introduces new general purpose compute action

Prerequisites

- Basic working knowledge of CodePipeline and CodeBuild (this blog focuses only on the update)

- Basic knowledge of Terraform

- Understanding of core AWS services and their operations

Understanding the CodePipeline Commands Action

- In its most basic essence, the Commands action allows you to run shell commands in a virtual compute instance.

- When it runs, the commands you set up are executed in their own separate container.

- Any files or data needed from earlier steps (input artifacts) in the pipeline are available for use in this environment. The best part is you don’t need to set up a separate CodeBuild project to use it.
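If you define pipelines as code instead of in the console, a Commands action is, as far as I can tell from the CodePipeline API, an ordinary action declaration with the Compute category and the Commands provider, carrying the shell commands in a top-level commands list. The sketch below builds such a declaration as a plain Python dict. The helper name commands_action and the input artifact name SourceArtifact are my own assumptions, and the field set should be checked against the CodePipeline API reference before you rely on it.

```python
import json


def commands_action(name, commands, input_artifact="SourceArtifact", run_order=1):
    """Build a CodePipeline action declaration for the Commands action.

    Hypothetical helper: field names follow my reading of the CodePipeline
    ActionDeclaration shape and should be verified before use.
    """
    return {
        "name": name,
        # The Commands action uses the general purpose "Compute" category.
        "actionTypeId": {
            "category": "Compute",
            "owner": "AWS",
            "provider": "Commands",
            "version": "1",
        },
        "runOrder": run_order,
        # Files from earlier stages (input artifacts) are available to the commands.
        "inputArtifacts": [{"name": input_artifact}],
        # One shell command per entry; multi-line formats are not supported.
        "commands": list(commands),
    }


if __name__ == "__main__":
    action = commands_action("RunTerraform", ["terraform --version", "aws s3 ls"])
    print(json.dumps(action, indent=2))
```

The resulting dict would go into the actions list of a stage in the pipeline structure you pass to the API or CLI.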

What happens behind the scenes of the Commands action

- CODEBUILD is STILL BEING USED !!!

It’s just that the whole process of first creating a CodeBuild project and then adding it as an action to CodePipeline has been abstracted away by this action.

- Since it relies on CodeBuild resources, any builds triggered by the Commands action count toward your account’s CodeBuild limits, including the build timeout (55 minutes) and the number of concurrent builds allowed.

- Note: The Commands action runs on CodeBuild-managed on-demand EC2 compute and uses an Amazon Linux 2023 standard 5.0 image.

Some caveats for the Commands action:

- All command formats are supported except multi-line formats.

- The commands action is not supported for cross-account or cross-Region actions.

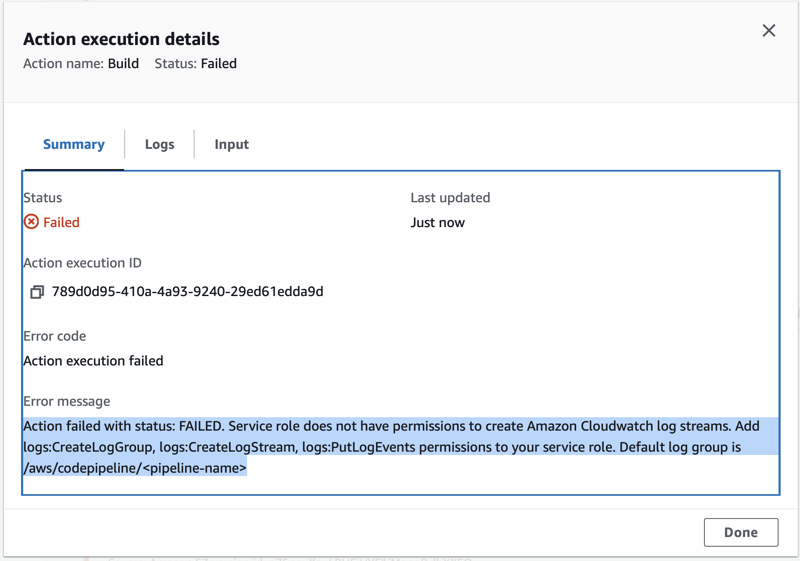

- When CodePipeline runs the action, it creates a log group using the name of the pipeline, so the Commands action (through the pipeline’s service role) needs the following permissions:

logs:CreateLogGroup

logs:CreateLogStream

logs:PutLogEvents

- Because the isolated build environment is used at the account level, an instance might be reused for another pipeline execution.
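If you prefer a scoped inline policy over a broad managed one, the three log permissions listed above can be expressed as an IAM policy document. This is a minimal sketch: the account ID and pipeline name are placeholders, and it assumes the log group is named /aws/codepipeline/<pipeline-name>, so confirm the actual log group name in CloudWatch Logs before relying on these resource ARNs.

```python
import json


def commands_log_policy(account_id, pipeline_name):
    """Return an IAM policy document with the log permissions the Commands action needs.

    Assumption: the log group is /aws/codepipeline/<pipeline_name>.
    """
    log_group_arn = (
        f"arn:aws:logs:*:{account_id}:log-group:/aws/codepipeline/{pipeline_name}"
    )
    return {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Effect": "Allow",
                "Action": [
                    "logs:CreateLogGroup",
                    "logs:CreateLogStream",
                    "logs:PutLogEvents",
                ],
                # The log group itself plus every log stream inside it.
                "Resource": [log_group_arn, f"{log_group_arn}:*"],
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder account ID and pipeline name.
    print(json.dumps(commands_log_policy("111122223333", "my-pipeline"), indent=2))
```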

Let’s see how the Commands action works!!!

- We will create a simple CD pipeline that creates Terraform resources in our AWS account.

- The basic premise of this pipeline is exactly the same as in my previous blog.

Upload Terraform zip to S3 -> CodePipeline is triggered -> Commands action runs terraform apply

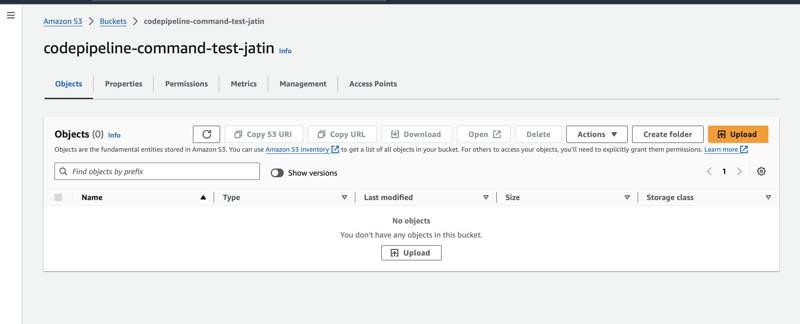

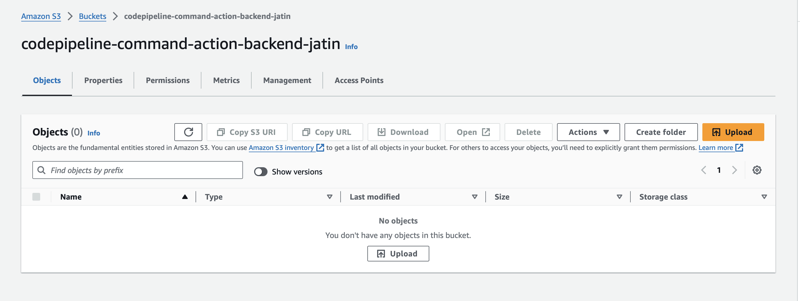

Step 1: Create S3 buckets for the Terraform source and backend

- Create a versioned S3 bucket to store our Terraform zip (source artifact). Uploading to this bucket will trigger the pipeline.

- Create another versioned S3 bucket to store the Terraform backend state.

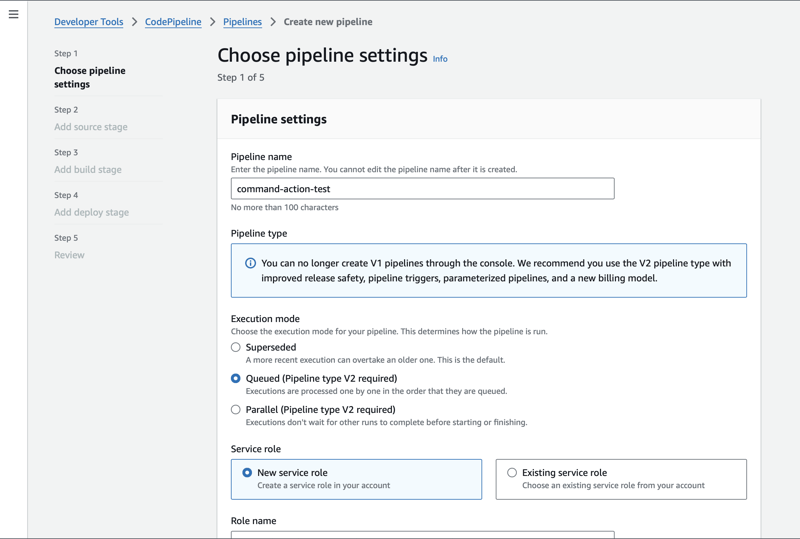
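If you would rather script Step 1 than click through the console, the sketch below creates a bucket and turns on versioning through an S3 client such as boto3’s. The client is passed in so the logic can be exercised without AWS credentials; the helper name create_versioned_bucket is hypothetical, and you would call it once for the source bucket and once for the backend bucket with your own (globally unique) bucket names.

```python
def create_versioned_bucket(s3_client, bucket, region="us-east-1"):
    """Create an S3 bucket and enable versioning on it.

    Hypothetical helper; s3_client is expected to behave like boto3.client("s3").
    """
    params = {"Bucket": bucket}
    # us-east-1 is the default location; any other region must be
    # passed explicitly as a LocationConstraint.
    if region != "us-east-1":
        params["CreateBucketConfiguration"] = {"LocationConstraint": region}
    s3_client.create_bucket(**params)
    # Enable versioning on the new bucket.
    s3_client.put_bucket_versioning(
        Bucket=bucket,
        VersioningConfiguration={"Status": "Enabled"},
    )
    return bucket
```

With boto3 installed, a call would look like create_versioned_bucket(boto3.client("s3"), "your-source-bucket-name").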

Step 2: Create Pipeline

- To keep this blog simple, I am keeping all the defaults.

- Note that I am creating a new service role because, as per the docs, it has the permissions for the Commands action. If you are using an existing role, you need to manually add the permissions mentioned earlier.

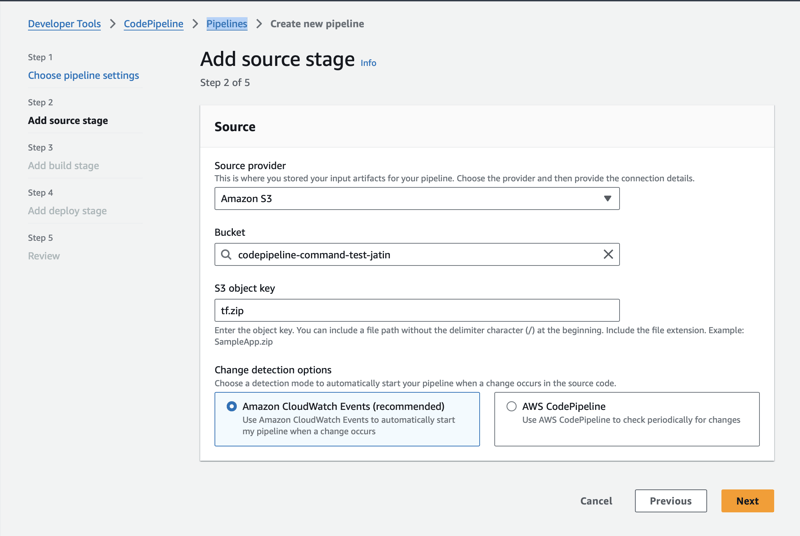

Step 3: Choose Source stage

- Choose the source S3 bucket created in Step 1.

- Note the S3 object key: tf.zip. Of course, you can choose any name.

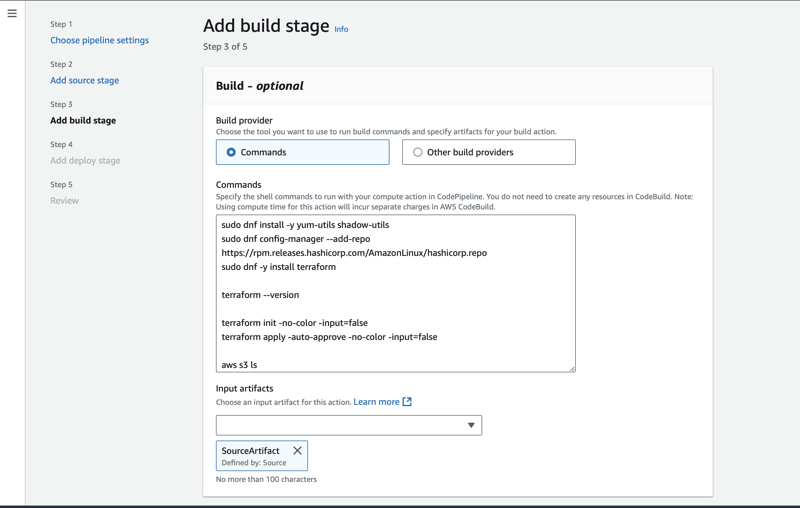
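For reference, the source stage configured in the console corresponds roughly to an S3 source action declaration like the one sketched below. The helper name and bucket name are hypothetical, the output artifact name SourceArtifact is what I understand to be the console default, and PollForSourceChanges is set to "false" on the assumption that change detection is event-based rather than polling. Treat this as a sketch, not the exact JSON the console generates.

```python
def s3_source_action(bucket, object_key="tf.zip", output_artifact="SourceArtifact"):
    """Build a CodePipeline S3 source action declaration (hypothetical helper)."""
    return {
        "name": "Source",
        "actionTypeId": {
            "category": "Source",
            "owner": "AWS",
            "provider": "S3",
            "version": "1",
        },
        "runOrder": 1,
        "configuration": {
            "S3Bucket": bucket,
            # The pipeline starts when this object key changes.
            "S3ObjectKey": object_key,
            # Assumption: event-based change detection instead of polling.
            "PollForSourceChanges": "false",
        },
        # Later actions, such as the Commands action, consume this artifact.
        "outputArtifacts": [{"name": output_artifact}],
    }
```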

Step 4: Add build stage / add commands

- This is the most important step of our pipeline and the gist of this blog.

- In the following commands, I am doing three things:

- Installing Terraform to deploy Terraform resources. Note the use of dnf, as Amazon Linux 2023 uses dnf as its package manager.

- Running terraform init and terraform apply.

- Using the aws CLI to list all S3 buckets in my account.

    sudo dnf install -y yum-utils shadow-utils
    sudo dnf config-manager --add-repo https://rpm.releases.hashicorp.com/AmazonLinux/hashicorp.repo
    sudo dnf -y install terraform
    terraform --version
    terraform init -no-color -input=false
    terraform apply -auto-approve -no-color -input=false
    aws s3 ls

Note: Don’t leave empty lines between the commands, otherwise you will see a length constraint error.

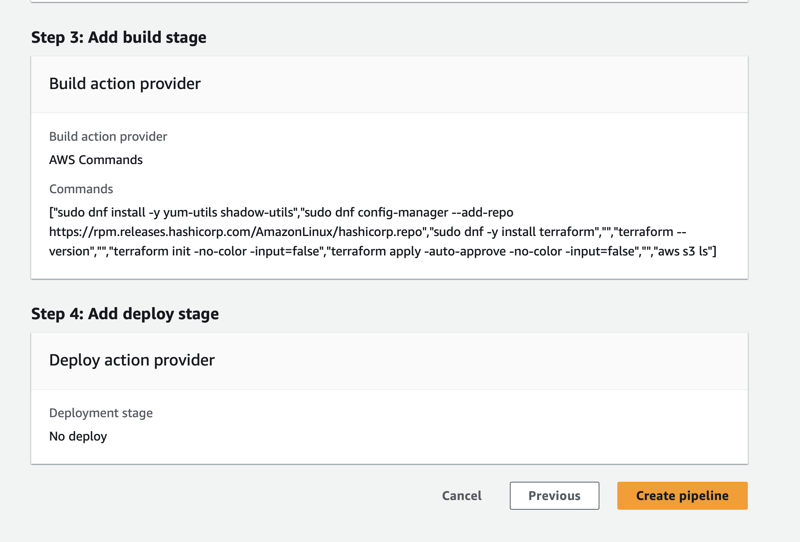
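Since multi-line formats are not supported and stray whitespace between commands triggers a length constraint error, a small pre-flight check can catch both before you paste commands into the console or a pipeline definition. The sketch below is a hypothetical helper of my own, not something CodePipeline provides: it splits a pasted block into one command per line, trims whitespace, drops blank lines, and rejects lines ending in a backslash, which would continue a command onto the next line.

```python
def prepare_commands(text):
    """Turn a pasted block of shell commands into a clean command list (hypothetical helper)."""
    # One command per line, surrounding whitespace trimmed.
    commands = [line.strip() for line in text.splitlines()]
    # Drop blank lines so no empty entry reaches the pipeline.
    commands = [cmd for cmd in commands if cmd]
    if not commands:
        raise ValueError("at least one command is required")
    for cmd in commands:
        # A trailing backslash continues the command on the next line,
        # which is a multi-line format.
        if cmd.endswith("\\"):
            raise ValueError(f"multi-line command not supported: {cmd!r}")
    return commands


# The commands from this step, with an accidental blank line in the middle.
TERRAFORM_COMMANDS = """
sudo dnf install -y yum-utils shadow-utils
sudo dnf config-manager --add-repo https://rpm.releases.hashicorp.com/AmazonLinux/hashicorp.repo
sudo dnf -y install terraform
terraform --version

terraform init -no-color -input=false
terraform apply -auto-approve -no-color -input=false
aws s3 ls
"""

if __name__ == "__main__":
    for cmd in prepare_commands(TERRAFORM_COMMANDS):
        print(cmd)
```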

Step 5: Skip deploy and Review

- In the final step, skip the deploy stage and review the pipeline.

Voila, you just created a CD pipeline without even knowing about or involving CodeBuild (at least not on your own).

Did it actually work?

- Create a zip of the Terraform code (main.tf):

    // optional step for backend
    terraform {
      backend "s3" {
        bucket = "codepipeline-command-action-backend-jatin"
        region = "us-east-1"
        key    = "terraform-deployment.tfstate"
      }
    }

    resource "aws_s3_bucket" "deploy-bucket" {
      bucket = "codepipeline-command-action-backend-1-jatin"

      tags = {
        Project     = "For CI CD deploy test"
        Environment = "Dev"
      }
    }

    resource "aws_s3_bucket" "deploy-bucket-two" {
      bucket = "codepipeline-command-action-backend-2-jatin"

      tags = {
        Project     = "For CI CD deploy test"
        Environment = "Dev"
      }
    }

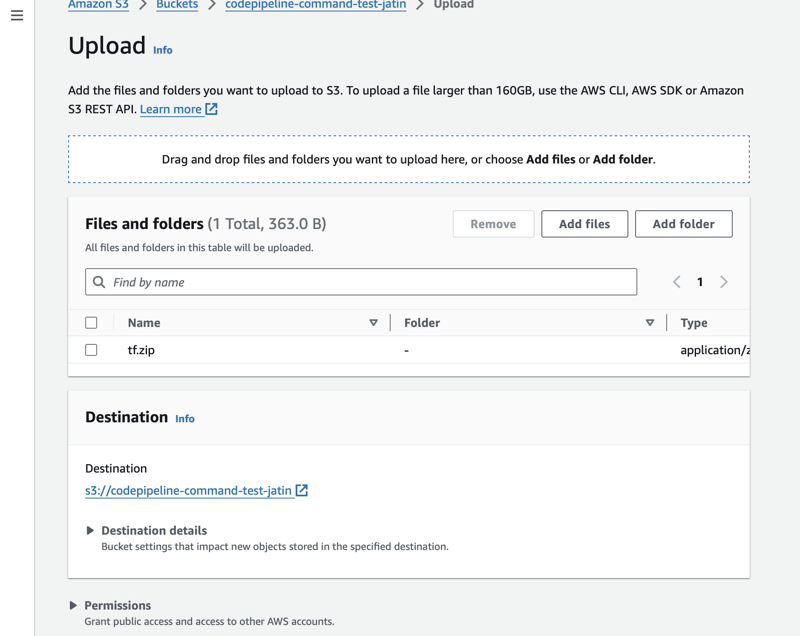

    zip -r tf.zip main.tf
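If the zip utility is not available (for example on Windows), the same archive can be built with Python’s standard zipfile module. This sketch assumes, like the command above, that main.tf should sit at the root of tf.zip so the extracted source artifact contains it directly; the helper name zip_terraform is hypothetical, and it zips every .tf file in the directory (here only main.tf).

```python
import zipfile
from pathlib import Path


def zip_terraform(source_dir, zip_path="tf.zip"):
    """Zip every .tf file in source_dir into zip_path, at the archive root."""
    source = Path(source_dir)
    tf_files = sorted(source.glob("*.tf"))
    if not tf_files:
        raise FileNotFoundError(f"no .tf files found in {source}")
    with zipfile.ZipFile(zip_path, "w", compression=zipfile.ZIP_DEFLATED) as archive:
        for tf_file in tf_files:
            # arcname drops the directory prefix so files land at the root.
            archive.write(tf_file, arcname=tf_file.name)
    return zip_path
```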

You also need to manually add the AmazonS3FullAccess permission to the service role created by CodePipeline, so that Terraform running inside CodePipeline can upload its state to the S3 bucket, since we used a Terraform backend configuration. Then upload the zip file to the source S3 bucket.
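The upload can also be scripted. The sketch below assumes a boto3-style S3 client passed in as a parameter (boto3’s S3 client provides upload_file and get_bucket_versioning) and uses tf.zip as the key, which must match the S3 object key configured in the source stage. The helper name upload_source is hypothetical; it also refuses to upload if versioning is not enabled on the bucket, since the source bucket is meant to be versioned.

```python
def upload_source(s3_client, bucket, zip_path="tf.zip", key="tf.zip"):
    """Upload the Terraform zip to the source bucket to trigger the pipeline.

    Hypothetical helper; s3_client is expected to behave like boto3.client("s3").
    """
    # get_bucket_versioning omits "Status" if versioning was never enabled.
    status = s3_client.get_bucket_versioning(Bucket=bucket).get("Status")
    if status != "Enabled":
        raise RuntimeError(f"versioning is not enabled on bucket {bucket}")
    # The key must match the S3 object key configured in the source stage.
    s3_client.upload_file(zip_path, bucket, key)
    return f"s3://{bucket}/{key}"
```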

At the time of writing, there is a bug in the service role created by CodePipeline: it does not have the permissions for the Commands action.

- Manually add the permissions discussed previously. For the purpose of this blog, I will attach the CloudWatchLogsFullAccess policy to the role.

- Optionally, also add the AmazonS3FullAccess policy so that Terraform can upload its state to the S3 bucket, since we used a Terraform backend configuration.

Check the CodePipeline logs and S3 buckets

- We can confirm that Terraform was able to create the buckets, and that the aws s3 ls command listed the buckets present in the account.
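To check the result from your own machine instead of reading the logs, you can compare the output of list_buckets against the bucket names declared in main.tf. A minimal sketch, again assuming a boto3-style client passed in; the helper name missing_buckets is hypothetical.

```python
# Bucket names declared in main.tf above.
EXPECTED_BUCKETS = {
    "codepipeline-command-action-backend-1-jatin",
    "codepipeline-command-action-backend-2-jatin",
}


def missing_buckets(s3_client, expected=EXPECTED_BUCKETS):
    """Return the expected bucket names that do not exist in the account."""
    response = s3_client.list_buckets()
    existing = {bucket["Name"] for bucket in response.get("Buckets", [])}
    return sorted(set(expected) - existing)
```

An empty list means both buckets created by the pipeline are present.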

From a DevOps Architect’s Perspective

With the Commands action, I don’t need to touch or deal with a CodeBuild action; I can just focus on building my CI/CD pipeline. But is this abstraction actually sufficient?

- As per the docs, the CodePipeline Commands action runs on CodeBuild-managed on-demand EC2 compute, so we don’t know the limits of memory, vCPUs, and disk space.

- If we use CodeBuild directly, we have the freedom to choose the compute that best fits our build, or even Lambda as compute for faster builds and deployments.

- This abstraction of the Commands action is a tradeoff between flexibility, cost savings, and the extra time spent learning CodeBuild versus simplicity, ease of use, and time savings.

- From a cost perspective, this abstraction should not be confused with “cheaper” CI/CD pipelines. Running the Commands action incurs separate charges in AWS CodeBuild.

I am very curious how the community will use this update in their pipelines, and how they think it will help their efficiency.

Leave your questions and comments, and let’s make life easier with CI/CD pipelines. You can always reach out to me on LinkedIn or X.